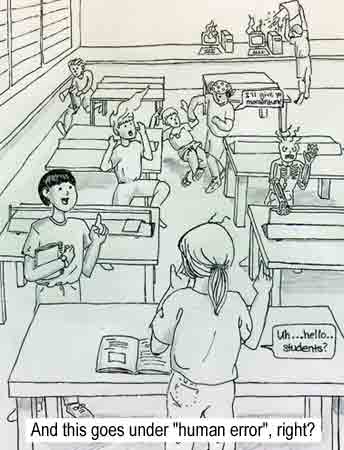

Like the student in the cartoon, most people think of an error as a mistake or a mishap. In science, the word "error" has an entirely different meaning. When we say "error," we are talking about the fact that we cannot take measurements to infinite precision.

Definition of error: The error or uncertainty in a value is the range in which we are reasonably certain the value lies. (What I mean by "reasonably certain" is covered in the last two sections.)

For example, if I asked you to measure the length of a pen, you might get out your ruler and declare that the pen is 18.2 cm long. You wouldn't say that the pen is 18.2021...34 cm long, with an infinite number of decimal places. Why not? The obvious answer is that the ruler has only so much precision. You can only measure to the nearest division on the ruler, or perhaps to half of one division if you are really careful.
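To make the definition concrete, here is a minimal Python sketch that turns a ruler reading into the range in which the value probably lies. It rests on two assumptions that are not part of the text above: the ruler is marked in millimetres (0.1 cm per division), and the uncertainty is taken to be half of one division. Both helper functions, `ruler_uncertainty` and `uncertainty_range`, are hypothetical names chosen for illustration.

```python
def ruler_uncertainty(division_cm: float, fraction_of_division: float = 0.5) -> float:
    """Uncertainty of a ruler reading, taken as a fraction of one division.

    ASSUMPTION: half a division by default. A very careful reader might
    justify a smaller fraction; a hurried one should use a larger one.
    """
    return fraction_of_division * division_cm


def uncertainty_range(value: float, uncertainty: float) -> tuple[float, float]:
    """Range in which we are reasonably certain the value lies: value +/- uncertainty."""
    return value - uncertainty, value + uncertainty


# Hypothetical example: the pen reads 18.2 cm on a ruler marked in millimetres (0.1 cm).
length_cm = 18.2
err_cm = ruler_uncertainty(division_cm=0.1)
low, high = uncertainty_range(length_cm, err_cm)

# Round for display only; the underlying floats carry tiny binary rounding errors.
print(f"{length_cm:.1f} ± {err_cm:.2f} cm (between {low:.2f} and {high:.2f} cm)")
```

Under these assumptions the pen's length is reported as 18.2 ± 0.05 cm, meaning we are reasonably certain it lies between 18.15 cm and 18.25 cm. Changing `fraction_of_division` shows how the reported range depends on how finely you trust yourself to read the ruler.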

Before we get into how to measure and report these uncertainties, let me first use the next section to explain why we need to calculate them at all.