Instead of limiting analysis to best case or worst case, analyze all cases based on a distribution of the probability of each case.

We implicitly used probabilistic analysis when we said that assuming uniform distribution of keys it takes n/2 comparisons on average to find an item in a linked list of n items.

The book's example is a little strange but illustrates the points well. Suppose you are using an employment agency to hire an office assistant.

    Hire-Assistant(n)
        best = 0                    // candidate 0 is a fictional least qualified candidate
        for i = 1 to n
            interview candidate i   // paying cost c_i
            if candidate i is better than candidate best
                best = i
                hire candidate i    // paying cost c_h
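
For experimenting, here is a minimal Python sketch of HIRE-ASSISTANT. It assumes candidates are given as a list of distinct numeric scores (higher is better); the function name and the default values of `c_i` and `c_h` are illustrative assumptions, not from the text. It returns the number of hires m and the total cost $c_i n + c_h m$.

```python
def hire_assistant(scores, c_i=1, c_h=10):
    """Simulate HIRE-ASSISTANT on candidates in the given order.

    scores: distinct numbers, higher means better (an assumption of this sketch).
    Returns (m, cost): the number of hires and the total cost c_i*n + c_h*m.
    """
    best = float("-inf")   # fictional least qualified candidate 0
    hires = 0
    cost = 0
    for s in scores:
        cost += c_i        # interview this candidate
        if s > best:       # better than the current best: hire
            best = s
            hires += 1
            cost += c_h
    return hires, cost
```

With the default costs, `hire_assistant([5, 4, 3, 2, 1])` returns `(1, 15)` (one hire) and `hire_assistant([1, 2, 3, 4, 5])` returns `(5, 55)` (every candidate hired), the two extremes considered next.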

What is the cost of this strategy?

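In general, if we interview n candidates and hire m of them, the cost is $O(c_i n + c_h m)$.

If each candidate is worse than all who came before (that is, the first candidate is the best), we hire one candidate:

$\Theta(c_i n + c_h) = \Theta(c_i n)$

If each candidate is better than all who came before, we hire all n (m = n):

$\Theta(c_i n + c_h n) = \Theta(c_h n)$, since $c_h > c_i$.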

But this is pessimistic. What happens in the average case? To figure this out, we need some tools ...

We don't know the distribution of inputs for the Hiring Problem, but suppose we could assume that candidates come in random order. Then the analysis can be done by counting permutations:
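
A brute-force sketch of that counting, assuming candidates are identified by their ranks 1..n (n is the best) and all n! orders are equally likely. The helper names are illustrative. The exact average comes out equal to the harmonic number $H_n = 1 + 1/2 + \cdots + 1/n$, but enumerating all n! permutations is only feasible for tiny n.

```python
from fractions import Fraction
from itertools import permutations
from math import factorial


def hires(order):
    """Number of hires HIRE-ASSISTANT makes on this order of ranks (1 = worst)."""
    best, m = 0, 0          # rank 0 plays the fictional least qualified candidate
    for rank in order:
        if rank > best:
            best, m = rank, m + 1
    return m


def average_hires(n):
    """Exact average number of hires over all n! equally likely orders of ranks 1..n."""
    total = sum(hires(p) for p in permutations(range(1, n + 1)))
    return Fraction(total, factorial(n))


def harmonic(n):
    """H_n = 1 + 1/2 + ... + 1/n as an exact fraction."""
    return sum(Fraction(1, k) for k in range(1, n + 1))
```

For example, `average_hires(3)` is `Fraction(11, 6)`, which equals `harmonic(3)`.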

We might not know the distribution of inputs or be able to model it.

However, uniform random distributions are easier to analyze than unknown distributions.

To obtain such a distribution regardless of the input distribution, we can randomize within the algorithm to impose a distribution on the inputs. Then it is easier to analyze expected behavior.

An algorithm is randomized if its behavior is determined in part by values provided by a random number generator.

This requires a change in the hiring problem scenario:

Thus we take control of the question of whether the input is randomly ordered: we enforce random order, so the average case becomes the expected value.

Here we introduce a technique for computing the expected value of a random variable, even when there is dependence between variables. Two informal definitions will get us started:

A random variable (e.g., X) is a variable that takes on any of a range of values according to a probability distribution.

The expected value of a random variable (e.g., E[X]) is the average value we would observe if we sampled the random variable repeatedly.

Given sample space S and event A in S, define the indicator random variable $I\{A\}$ to be 1 if A occurs and 0 if A does not occur.

We will see that indicator random variables simplify analysis by letting us work with the probability of the values of a random variable separately.

Lemma 1: For an event A, let $X_A = I\{A\}$. Then the expected value $E[X_A] = \Pr\{A\}$ (the probability of event A).

Proof: Let ¬A be the complement of A. Then

$E[X_A] = E[I\{A\}]$ (by definition)

$= 1 \cdot \Pr\{A\} + 0 \cdot \Pr\{\neg A\}$ (definition of expected value)

$= \Pr\{A\}$.

What is the expected number of heads when flipping a fair coin once?

What is the expected number of heads when we flip a fair coin n times?

Let X be a random variable for the number of heads in n flips.

We could compute $E[X] = \sum_{i=0}^{n} i \Pr\{X = i\}$, that is, compute the probability of there being 0 heads total, 1 head total, 2 heads total, ..., n heads total, weight each by its number of heads, and add, as is done in equation C.37 in the appendix and in my screencast lecture 5A. But it's messy!
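
For comparison, the direct sum is easy for a computer even though it is messy by hand. This sketch assumes a fair coin, so $\Pr\{X = i\} = \binom{n}{i}/2^n$; the function name is illustrative. Exact fractions show the result is n/2.

```python
from fractions import Fraction
from math import comb


def expected_heads_by_distribution(n):
    """E[X] = sum_{i=0}^{n} i * Pr{X = i}, using Pr{X = i} = C(n, i) / 2^n for a fair coin."""
    return sum(i * Fraction(comb(n, i), 2 ** n) for i in range(n + 1))
```

For example, `expected_heads_by_distribution(10)` is `Fraction(5, 1)`.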

Instead use indicator random variables to count something we do know the probability for: the probability of getting heads when flipping the coin once:
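
Spelled out, the indicator-variable computation uses linearity of expectation. Here $X_i$ is a name introduced for the indicator of heads on flip i:

```latex
X_i = I\{\text{the } i\text{th flip comes up heads}\}, \qquad X = \sum_{i=1}^{n} X_i

E[X_i] = \Pr\{\text{heads}\} = \frac{1}{2} \qquad \text{(by Lemma 1)}

E[X] = E\left[\sum_{i=1}^{n} X_i\right] = \sum_{i=1}^{n} E[X_i] = \sum_{i=1}^{n} \frac{1}{2} = \frac{n}{2}
```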

It's very important that you understand the above before going on:

Assume that the candidates arrive in random order (we can enforce that by randomization if needed).

Let X be the random variable for the number of times we hire a new office assistant.

Define indicator random variables $X_1, X_2, \ldots, X_n$, where $X_i = I\{\text{candidate } i \text{ is hired}\}$.

We will rely on these properties:

We need to compute Pr{candidate i is hired}:
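
The reasoning: with candidates in random order, candidate i is hired exactly when it is better than candidates 1 through i − 1, that is, when it is the best of the first i. Each of those i candidates is equally likely to be the best, so the probability is 1/i. A brute-force check over all orders of ranks 1..n (the function name is illustrative):

```python
from fractions import Fraction
from itertools import permutations
from math import factorial


def prob_hired(n, i):
    """Exact Pr{candidate i is hired} (i is 1-indexed) over all n! equally likely orders.

    Candidate i is hired exactly when its rank is the maximum of the first i ranks.
    """
    count = sum(1 for p in permutations(range(1, n + 1)) if p[i - 1] == max(p[:i]))
    return Fraction(count, factorial(n))
```

For example, `prob_hired(5, 3)` is `Fraction(1, 3)`.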

By Lemma 1, $E[X_i] = 1/i$, a fact that lets us compute $E[X]$ (notice that this follows the proof pattern outlined above!):
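
Since $X = X_1 + X_2 + \cdots + X_n$, linearity of expectation gives:

```latex
E[X] = E\left[\sum_{i=1}^{n} X_i\right] = \sum_{i=1}^{n} E[X_i] = \sum_{i=1}^{n} \frac{1}{i}
```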

The sum is a harmonic series. From formula A.7 in Appendix A, the nth harmonic number is:
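
Formula A.7 pins down the harmonic number up to an additive constant, so the expected number of hires, $\sum_{i=1}^{n} 1/i = H_n$, is also $\ln n + O(1)$:

```latex
H_n = \sum_{k=1}^{n} \frac{1}{k} = \ln n + O(1)
```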

Thus, the expected hiring cost is $\Theta(c_h \ln n)$, much better than the worst case $\Theta(c_h n)$! (ln is the natural log. Formula 3.15 of the text can be used to show that $\ln n = \Theta(\lg n)$.)

We will see this kind of analysis repeatedly. Its strengths are that it lets us count in ways for which we have probabilities (compare to C.37), and that it works even when there are dependencies between variables.

This is Exercise 5.2-5 on page 122, for which there is a publicly posted solution. This example shows the great utility of indicator random variables: study it carefully!

Let A[1..n] be an array of n distinct numbers. If i < j and A[i] > A[j], then the pair (i, j) is called an inversion of A (the two elements are "out of order" with respect to each other). Suppose that the elements of A form a uniform random permutation of ⟨1, 2, ..., n⟩.

We want to find the expected number of inversions. This has obvious applications to analysis of sorting algorithms, as it is a measure of how much a sequence is "out of order". In fact, each iteration of the while loop in insertion sort corresponds to the elimination of one inversion (see the posted solution to problem 2-4c).

If we had to count in terms of whole permutations, figuring out how many permutations had 0 inversions, how many had 1, ... etc. (sound familiar? :), that would be a real pain, as there are n! permutations of n items. Can indicator random variables save us this pain by letting us count something easier?

We will count the number of inversions directly, without worrying about what permutations they occur in:

Let $X_{ij}$, i < j, be an indicator random variable for the event that A[i] > A[j] (they are inverted).

More precisely, define $X_{ij} = I\{A[i] > A[j]\}$ for $1 \le i < j \le n$.

$\Pr\{X_{ij} = 1\} = 1/2$ because, given two distinct random numbers, the probability that the first is bigger than the second is 1/2. (We don't care where they are in a permutation, just that we can easily identify the probability that they are out of order. Brilliant in its simplicity!)

By Lemma 1, $E[X_{ij}] = 1/2$, and now we are ready to count.

Let X be the random variable denoting the total number of inverted pairs in the array. X is the sum of all $X_{ij}$ that meet the constraint $1 \le i < j \le n$:
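
Written as a double sum over all pairs with i < j:

```latex
X = \sum_{i=1}^{n-1} \sum_{j=i+1}^{n} X_{ij}
```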

We want the expected number of inverted pairs, so take the expectation of both sides:
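
In symbols, with X written as the double sum of the $X_{ij}$ over $1 \le i < j \le n$:

```latex
E[X] = E\left[\sum_{i=1}^{n-1} \sum_{j=i+1}^{n} X_{ij}\right]
```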

Using linearity of expectation, we can simplify this far:

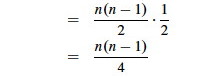

The fact that our nested summation is choosing 2 things out of n lets us write this as:
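
In symbols, each $E[X_{ij}]$ is 1/2 and there are $\binom{n}{2}$ pairs with i < j:

```latex
E[X] = \sum_{i=1}^{n-1} \sum_{j=i+1}^{n} \frac{1}{2} = \binom{n}{2} \frac{1}{2}
```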

We can use formula C.2 from the appendix:
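
Formula C.2 is the usual expression for the binomial coefficient; with k = 2 it reads:

```latex
\binom{n}{k} = \frac{n!}{k!\,(n-k)!}
\qquad\text{so}\qquad
\binom{n}{2} = \frac{n!}{2!\,(n-2)!}
```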

In screencast 5A I show how to simplify this to (n(n−1))/2, resulting in:
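
Carrying out that simplification and multiplying by the 1/2 contributed by each $E[X_{ij}]$:

```latex
E[X] = \binom{n}{2} \frac{1}{2} = \frac{n(n-1)}{2} \cdot \frac{1}{2} = \frac{n(n-1)}{4}
```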

Therefore the expected number of inverted pairs is n(n − 1)/4, or $\Theta(n^2)$.
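
A brute-force sanity check for small n, as a sketch: it counts inversions in every permutation of ⟨1, ..., n⟩ and averages exactly. This is precisely the whole-permutation counting that the indicator variables let us avoid, so it is only feasible for tiny n. The function names are illustrative.

```python
from fractions import Fraction
from itertools import combinations, permutations
from math import factorial


def inversions(a):
    """Count pairs (i, j) with i < j and a[i] > a[j] (0-indexed)."""
    return sum(1 for i, j in combinations(range(len(a)), 2) if a[i] > a[j])


def expected_inversions(n):
    """Exact average number of inversions over all n! permutations of 1..n."""
    total = sum(inversions(p) for p in permutations(range(1, n + 1)))
    return Fraction(total, factorial(n))
```

For example, `expected_inversions(4)` is `Fraction(3, 1)`, matching 4 · 3 / 4.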

Above, we had to assume a distribution of inputs, but we may not have control over inputs.

An "adversary" can always mess up our assumptions by giving us worst case inputs. (This can be a fictional adversary used to make analytic arguments, or it can be a real one ...)

Randomized algorithms foil the adversary by imposing a distribution of inputs.

The modification to HIRE-ASSISTANT is trivial: add a line at the beginning that randomly permutes the list of candidates.

Having done so, the above analysis applies to give us expected value rather than average case.
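
A minimal Python sketch of the randomized version, assuming distinct numeric scores. It uses the standard library's `random.shuffle` to impose a uniformly random order; the function names and the `rng` parameter are illustrative. On the adversarial input sorted from worst to best, the plain version always hires n times, while the randomized version averages about $H_n$ hires.

```python
import random


def hire_assistant(scores):
    """Number of hires HIRE-ASSISTANT makes when candidates arrive in the given order."""
    best, hires = float("-inf"), 0
    for s in scores:
        if s > best:
            best, hires = s, hires + 1
    return hires


def randomized_hire_assistant(scores, rng=random):
    """Randomly permute the candidates first, then run HIRE-ASSISTANT."""
    order = list(scores)   # copy, so the caller's list is left untouched
    rng.shuffle(order)     # impose a uniformly random order on the input
    return hire_assistant(order)
```

Passing a seeded `random.Random` as `rng` makes runs reproducible; averaging many runs on `range(100)` gives roughly 5.19, close to $H_{100}$.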

Discuss: Summarize the difference between probabilistic analysis and randomized algorithms.

There are different ways to randomize algorithms. One way is to randomize the ordering of the input before we apply the original algorithm (as was suggested for HIRE-ASSISTANT above). A procedure for randomizing an array:

    Randomize-In-Place(A)
        n = A.length
        for i = 1 to n
            swap A[i] with A[Random(i, n)]

The text offers a proof that this produces a uniform random permutation. It is obviously Θ(n).
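
A direct Python transcription of RANDOMIZE-IN-PLACE, shifted to 0-based indexing. Here `random.randint(i, n - 1)` plays the role of RANDOM(i, n), since both include their endpoints; the optional `rng` parameter is an addition for reproducible testing.

```python
import random


def randomize_in_place(a, rng=random):
    """Permute list a in place uniformly at random (RANDOMIZE-IN-PLACE, 0-indexed)."""
    n = len(a)
    for i in range(n):
        j = rng.randint(i, n - 1)   # RANDOM(i, n): uniform over positions i..n-1, inclusive
        a[i], a[j] = a[j], a[i]     # swap A[i] with A[j]
    return a
```

The loop does one constant-time swap per position, which is where the Θ(n) bound comes from (assuming RANDOM takes constant time).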

Another approach to randomization is to randomize choices made within the algorithm. This is the approach taken by Skip Lists ...

This is additional material, not found in your textbook. I introduce Skip Lists here for three reasons:

Motivation: Sometimes students who have seen binary search, when asked about the efficiency of searching linked lists, think they can apply binary search to linked lists. This does not work because, unlike an array element, the "middle" list cell cannot be accessed directly. But can we modify linked lists to enable diving into the middle to get O(lg n) behavior?

Skip lists were first described by William Pugh in "Skip lists: a probabilistic alternative to balanced trees," Commun. ACM 33, 6 (June 1990), 668-676, DOI 10.1145/78973.78977, http://doi.acm.org/10.1145/78973.78977 or ftp://ftp.cs.umd.edu/pub/skipLists/skiplists.pdf. (He actually had a conference paper the year before, but the CACM version is more accessible.)

My discussion below follows Goodrich & Tamassia (1998), Data Structures and Algorithms in Java, first edition, and uses images from their slides. Some details differ from the edition 4 version of the text.

Given a set S of items with distinct keys, a skip list is a series of lists $S_0, S_1, \ldots, S_h$ (as we shall see, h is the height) such that:

We can implement skip lists with "quad-nodes" that have above and below fields as well as the more familiar prev and next:

An algorithm for searching for a key k in a skip list is as follows:

    SkipSearch(k)
    Input: search key k
    Output: position p in S such that the item at p has the largest key ≤ k
        p = topmost-left position of S     // which has at least −∞ and +∞
        while below(p) ≠ null do
            p = below(p)                   // drop down
            while key(next(p)) ≤ k do
                p = next(p)                // scan forward
        return p

Example: Search for 78:

Notice that if the search fails, a position containing a key other than k will be returned, so we need to check the key of the position returned. Having this position will be useful when doing insertion.
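
The following Python sketch builds a small skip list out of quad-nodes and runs SkipSearch on it. The keys and the `build` helper are hypothetical, chosen for illustration (they are not the figure's list). `build` adds a top list holding only −∞ and +∞, which is what lets SkipSearch start by dropping down without ever scanning the top list.

```python
import math

NEG_INF, POS_INF = -math.inf, math.inf


class QuadNode:
    """A skip-list position with the four links used in the notes."""

    def __init__(self, key):
        self.key = key
        self.prev = self.next = self.above = self.below = None


def build(levels):
    """Link quad-nodes for S_0 = levels[0], S_1 = levels[1], ... (hypothetical helper).

    Each list must be a sorted subsequence of the one below it. An extra top list
    holding only the sentinels is added. Returns the topmost-left (-inf) position.
    """
    below_row = None                       # key -> node in the list just below
    for keys in levels + [[]]:
        row, left = {}, None
        for key in [NEG_INF, *keys, POS_INF]:
            node = QuadNode(key)
            if left is not None:           # horizontal link to the left neighbor
                left.next, node.prev = node, left
            if below_row is not None:      # vertical link to the same key one level down
                node.below, below_row[key].above = below_row[key], node
            row[key], left = node, node
        below_row = row
    return below_row[NEG_INF]


def skip_search(start, k):
    """Return the bottom-level position holding the largest key <= k."""
    p = start
    while p.below is not None:
        p = p.below                        # drop down
        while p.next.key <= k:
            p = p.next                     # scan forward
    return p


top = build([[12, 23, 26, 31, 34, 44, 56, 64, 78],   # S_0 (hypothetical keys)
             [23, 34, 64, 78],                       # S_1
             [34, 78]])                              # S_2
print(skip_search(top, 78).key)   # 78
print(skip_search(top, 50).key)   # 44: the search failed, so check the key
```

Searching for a key smaller than every item returns the −∞ sentinel, which is exactly the position after which such a key would be inserted.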

Construction of a skip list is randomized:

The pseudocode provided by Goodrich & Tamassia uses a helper procedure InsertAfterAbove(p1, p2, k, d) (left as an exercise), which inserts key k and data d after p1 and above p2. (The following omits code for returning "elements", which is not relevant here.)

    SkipInsert(k, d)
    Input: search key k and data d
    Instance variables: s is the start node of the skip list,
        h is the height of the skip list, and n is the number of entries
    Output: none (the list is modified to store d under k)
        p = SkipSearch(k)
        q = InsertAfterAbove(p, null, k, d)       // we are at the bottom level
        l = 0                                     // keeps track of the level we are at
        while random(0,1) < 1/2 do
            l = l + 1
            if l ≥ h then                         // need to add a level
                h = h + 1
                t = next(s)
                s = InsertAfterAbove(null, s, −∞, null)
                InsertAfterAbove(s, t, +∞, null)
            while above(p) == null do
                p = prev(p)                       // scan backwards to find tower
            p = above(p)                          // jump higher
            q = InsertAfterAbove(p, q, k, d)      // add new item to top of tower
        n = n + 1
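
A runnable Python sketch of SkipInsert, following the pseudocode line by line. `insert_after_above` is our own version of the helper the text leaves as an exercise, and `levels` is a debugging helper; both are assumptions, not G&T's code. We also assume an empty skip list starts with $S_0$ plus a sentinel-only top list $S_1$ (h = 1), because SkipSearch drops down before it scans and so never scans the top list; the `l ≥ h` test then keeps the top list sentinel-only.

```python
import math
import random

NEG_INF, POS_INF = -math.inf, math.inf


class QuadNode:
    def __init__(self, key, data=None):
        self.key, self.data = key, data
        self.prev = self.next = self.above = self.below = None


def insert_after_above(p1, p2, key, data):
    """Hypothetical InsertAfterAbove: new node right after p1 (if any) and on top of p2 (if any)."""
    q = QuadNode(key, data)
    if p1 is not None:                  # splice q into p1's list
        q.prev, q.next = p1, p1.next
        if p1.next is not None:
            p1.next.prev = q
        p1.next = q
    if p2 is not None:                  # stack q on top of p2
        q.below, p2.above = p2, q
    return q


class SkipList:
    def __init__(self, rng=None):
        self.rng = rng if rng is not None else random.Random()
        bottom = QuadNode(NEG_INF)                                 # S_0: -inf, +inf
        t = insert_after_above(bottom, None, POS_INF, None)
        self.s = insert_after_above(None, bottom, NEG_INF, None)   # S_1: sentinels only
        insert_after_above(self.s, t, POS_INF, None)
        self.h, self.n = 1, 0

    def search(self, k):
        p = self.s
        while p.below is not None:
            p = p.below                          # drop down
            while p.next.key <= k:
                p = p.next                       # scan forward
        return p

    def insert(self, k, d=None):
        p = self.search(k)
        q = insert_after_above(p, None, k, d)    # bottom level
        level = 0
        while self.rng.random() < 0.5:           # "heads": promote one more level
            level += 1
            if level >= self.h:                  # need to add a level
                self.h += 1
                t = self.s.next
                self.s = insert_after_above(None, self.s, NEG_INF, None)
                insert_after_above(self.s, t, POS_INF, None)
            while p.above is None:
                p = p.prev                       # scan backwards to find a tower
            p = p.above                          # jump higher
            q = insert_after_above(p, q, k, d)   # add to the top of the new tower
        self.n += 1

    def levels(self):
        """Keys of S_h, ..., S_0 (top list first), without sentinels."""
        rows, row = [], self.s
        while row is not None:
            keys, p = [], row.next
            while p.key != POS_INF:
                keys.append(p.key)
                p = p.next
            rows.append(keys)
            row = row.below
        return rows
```

Inserting a few hundred distinct keys with a seeded `random.Random` and printing `levels()` shows each list as a sorted subsequence of the one below it, with roughly half as many keys at each level going up.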

For example, inserting key 15, when the randomization gave two "heads", forcing growth of h (for simplicity the figure does not include the above and below pointers):

Deletion requires finding and removing all occurrences of the key (one per level of its tower), and removing all but one empty list if needed. Example for removing key 34:

The worst case performance of skip lists is very bad, but highly unlikely. Suppose random(0,1) is always less than 1/2. If there were no bound on the height of the data structure, SkipInsert would never exit! But this is as likely as an unending sequence of "heads" when flipping a fair coin.

If we do impose a bound h on the height of the list (h can be a function of n), the worst case is that every item is inserted at every level. Then searching, insertion, and deletion are O(n + h): you not only have to search a list $S_0$ of n items, as with conventional linked lists; you also have to go down h levels.

But the probabilistic analysis shows that the expected time is much better. This requires that we find the expected value of the height h:
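
A sketch of the standard high-probability argument, assuming (as in SkipInsert) that each promotion is an independent fair coin flip. An item reaches list $S_i$ only if its first i flips are all heads, and a union bound over the n items gives the second line:

```latex
\Pr\{\text{a given item is in } S_i\} = \frac{1}{2^i}

\Pr\{S_i \text{ contains at least one item}\} \le \frac{n}{2^i}

\Pr\{S_{3\lg n} \text{ contains at least one item}\} \le \frac{n}{2^{3\lg n}} = \frac{n}{n^3} = \frac{1}{n^2}
```

So with probability at least $1 - 1/n^2$, no item rises above level $3\lg n$, and h is O(lg n).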

The search time is proportional to the number of drop-down steps plus the number of scan-forward steps. The number of drop-down steps equals h, which is O(lg n) with high probability. So we need the number of scan-forward steps.

We will determine the expected number of scan-forward steps per level and multiply this by the number of levels.

In their textbook (1998), G&T provide this argument: Let $X_i$ be the number of keys examined scanning forward at level i.
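
One way to finish this argument, sketched under the same fair-coin assumption: a key we scan past at level i cannot also be in $S_{i+1}$ (otherwise the scan at level i + 1 would already have reached it), and each key examined is in $S_{i+1}$ with probability 1/2. So $X_i$ is at most the number of fair-coin flips needed to see the first heads, and combining this with the O(lg n) height bound:

```latex
E[X_i] \le \sum_{j=1}^{\infty} j \left(\frac{1}{2}\right)^{j} = 2

E[\text{scan-forward steps}] \le \sum_{i=0}^{h} E[X_i] \le 2(h + 1) = O(\lg n)
```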

In their slides (2002), G&T provide this alternative analysis of the number of scan-forwards needed. The reasoning is very similar, but based on the odds of the list we encounter being constructed:

A similar analysis can be applied to insertion and deletion. Thus, skip lists offer expected O(lg n) search, insertion, and deletion, far better than the O(n) of ordinary linked lists.

Binary search of sorted arrays gives guaranteed O(lg n) performance, while for skip lists this is only the expected performance. However, skip lists are superior to sorted arrays for insertion and deletion, because to insert or delete in a sorted array one has to shift array elements, which costs O(n) per insertion or deletion. If all keys are inserted at once, it costs O(n lg n) to sort the array (something we prove in a few weeks).

G&T also show that the expected space requirement is O(n). They leave as an exercise the elimination of above and prev fields: if random(0,1) is called up to h times in advance of the insertion search, then one can insert the item "on the way down" as specified by the results.
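
The space bound uses the same fact that an item appears in $S_i$ with probability $1/2^i$. Summing over all levels (and ignoring the two sentinels per level) gives:

```latex
E[\text{number of item nodes}] \le \sum_{i=0}^{\infty} \frac{n}{2^i} = n \sum_{i=0}^{\infty} \frac{1}{2^i} = 2n
```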